Artificial Intelligence (AI)-powered eLearning platforms have emerged as a transformative force, promising personalized and efficient learning experiences. Adaptemy, a leading player in this field, harnesses the power of AI to provide cutting-edge eLearning solutions. But how does Adaptemy ensure the effectiveness of its AI-powered prototypes? The answer lies in comprehensive learning analytics.

“How do you evaluate prototypes?” is a question we have been asked more times than we care to remember, and with good reason.

Any organisation considering an upgrade to AI-powered technologies and processes needs to justify the ROI. It needs evidence (not vague marketing conjecture) to prove the efficacy of the project it has invested its time and money in.

Adaptemy employs a multi-faceted framework for the analysis of prototypes. This includes, but is not limited to:

- Expert Review – Evaluation of the prototype by the relevant Subject Matter Experts against benchmark criteria (KPIs)

- Project Management Review – What worked and what do we need to improve?

- User Satisfaction Surveys – Users complete satisfaction surveys (delivered in-app or externally), which provide qualitative feedback.

- Technical Performance Review – These metrics pertain to system security, uptime, stability and performance.

- Learning Analytics – The hard data that proves the key learning goals were met or exceeded.

The first four are, frankly, what any project leader ought to expect from a prototype. The fifth part of the framework, Learning Analytics, is the focus of today’s article. Led by Dr. Ioana Ghergulescu…. this is a summary only:

Understanding the Importance of Learning Analytics

Before delving into Adaptemy’s approach, let’s establish the significance of learning analytics in the world of eLearning. Learning analytics is the process of collecting, analyzing, and interpreting data from educational platforms to optimize learning outcomes. It provides valuable insights into student performance, engagement, and the efficacy of the learning materials. These insights are essential for fine-tuning eLearning systems, making them more adaptive and effective.

Monthly Analytics Report: A Peek into Adaptemy’s Evaluation Process

Adaptemy’s commitment to delivering top-notch eLearning experiences is reflected in its robust evaluation process, which is guided by a monthly analytics report. This report, designed primarily for the “Project Leadership Team” consisting of Learning Designers, Data Scientists, and Product Owners, offers a comprehensive overview of the AI-powered eLearning prototype’s performance. Here’s an introduction to some key aspects covered in this report:

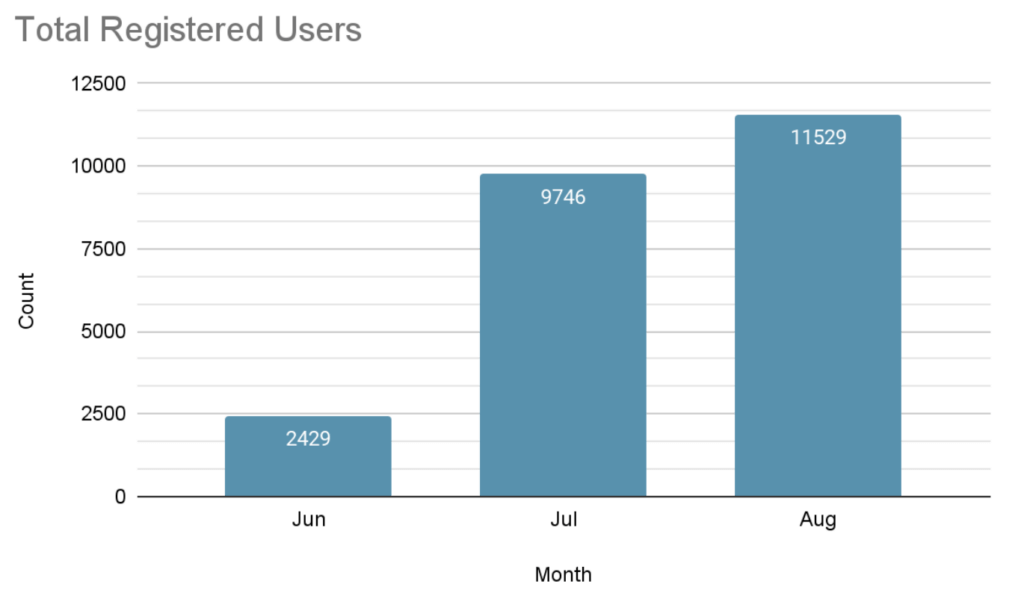

1. System Usage

Adaptemy provides clients with detailed analytics on system usage, allowing segmentation by user type, time, and specific features. This data is crucial for understanding how various user groups engage with the platform. For instance, it can distinguish between the activities of lecturers, administrators, teachers, and students, while also analyzing usage patterns based on factors like time of day and day of the week.

System usage metrics serve as a universal benchmark for gauging product adoption and success. By tracking usage trends, Adaptemy can either affirm client confidence in the product or identify areas that require further analysis and remediation.
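
As a rough illustration of this kind of segmentation, the sketch below counts events by user role, weekday, and hour. The event log, its field layout, and the `usage_by` helper are hypothetical and are not Adaptemy's actual data model.

```python
from collections import Counter
from datetime import datetime

# Hypothetical event log: (user role, ISO timestamp, feature used).
events = [
    ("student", "2023-10-02T09:15:00", "quiz"),
    ("student", "2023-10-02T20:40:00", "lesson"),
    ("teacher", "2023-10-03T08:05:00", "dashboard"),
    ("student", "2023-10-07T11:30:00", "quiz"),
]

def usage_by(events, key):
    """Count events per segment; `key` maps one event to a segment label."""
    return Counter(key(e) for e in events)

by_role = usage_by(events, lambda e: e[0])
# weekday() returns 0 for Monday through 6 for Sunday.
by_weekday = usage_by(events, lambda e: datetime.fromisoformat(e[1]).weekday())
by_hour = usage_by(events, lambda e: datetime.fromisoformat(e[1]).hour)
by_feature = usage_by(events, lambda e: e[2])
```

The same helper can segment by any other attribute recorded on the event, which is why the grouping key is passed in as a function.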

2. Model Accuracy

Model accuracy is a pivotal aspect of Adaptemy’s evaluation process. It is assessed using the industry-standard metric known as AUC (Area Under the Curve, usually the ROC curve). In essence, AUC measures how well the Adaptemy Engine’s probabilistic predictions about future student performance, based on accumulated data from the learner model, separate the answers students go on to get right from those they get wrong. A score of 0.5 is no better than chance, and 1.0 is a perfect ranking.

A high AUC score is indicative of a high-quality solution. It signifies that the independent system models, including the Curriculum Model, Learner Model, and Content Model, are accurate. Adaptemy constantly strives to improve AUC through machine learning and iterative enhancements.
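
To make the metric concrete, here is a minimal sketch that assumes AUC means the area under the ROC curve. It computes the probability that a randomly chosen correct answer was given a higher predicted score than a randomly chosen incorrect one. The `roc_auc` function and the sample data are illustrative only and do not come from the Adaptemy Engine.

```python
def roc_auc(labels, scores):
    """ROC AUC as the share of (positive, negative) pairs ranked correctly.

    Ties between a positive and a negative score count as half a win.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative outcome")
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in pos
        for n in neg
    )
    return wins / (len(pos) * len(neg))

# Hypothetical data: 1 = answered correctly, 0 = answered incorrectly,
# paired with the predicted probability of a correct answer.
labels = [1, 0, 1, 1, 0, 0]
scores = [0.9, 0.3, 0.6, 0.4, 0.5, 0.2]
auc = roc_auc(labels, scores)
```

The pairwise form is quadratic in the number of answers, so it suits small samples; a production pipeline would more likely use a library routine.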

3. Curriculum Model Analysis

The Curriculum Model plays a significant role in eLearning, as it defines the prerequisite and post-requisite relationships between knowledge units or “Concepts” in a course. Adaptemy’s evaluation involves validating these initial assumptions through machine learning based on real user data.

Curriculum-level insights become statistically relevant only after a considerable number of users have completed the course. Adaptemy uses this analysis to recommend adjustments such as adding or removing links, strengthening or weakening links, and revising or authoring new concepts, all aimed at enhancing the learning experience.
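
One simple way to picture this kind of link validation is sketched below. It is a stand-in for the machine learning described above, not the method itself. For an assumed prerequisite link, it compares the pass rate on the target concept between learners who had mastered the prerequisite and those who had not. The concept names, records, and `link_support` function are hypothetical.

```python
def link_support(records, prereq, target):
    """Target pass rate with the prerequisite mastered, minus the rate without.

    A value near zero suggests the link may be weaker than assumed.
    Returns None when one of the two groups is empty.
    """
    with_prereq = [r[target] for r in records if r[prereq]]
    without_prereq = [r[target] for r in records if not r[prereq]]
    if not with_prereq or not without_prereq:
        return None
    return (sum(with_prereq) / len(with_prereq)
            - sum(without_prereq) / len(without_prereq))

# Hypothetical mastery records: concept name -> mastered or not.
records = [
    {"fractions": True, "ratios": True},
    {"fractions": True, "ratios": True},
    {"fractions": True, "ratios": False},
    {"fractions": False, "ratios": False},
    {"fractions": False, "ratios": True},
    {"fractions": False, "ratios": False},
]
support = link_support(records, "fractions", "ratios")
```

With only a handful of learners the difference is noisy, which is consistent with the point above that curriculum-level insights need a considerable number of course completions.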

4. Assessment Content Model Analysis

Assessment items are integral to evaluating student mastery. Adaptemy conducts question-level analysis to assess the quality of assessment items. Two key metrics under examination are the Discriminant Value, indicating the quality of a question, and Challenge Level, which assesses the alignment between perceived and actual question difficulty.

High-quality assessment items are crucial for effective learning. Adaptemy’s evaluation process flags questions with low discriminant values or significant difficulty variances for review, reducing the need for user complaint analysis or costly annual reviews.
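
The article does not define how the two metrics are calculated, so the sketch below uses common stand-ins from classical test theory. It uses an upper-lower discrimination index for question quality. For challenge level it uses the gap between observed difficulty and the difficulty the author assigned. All names and data are hypothetical.

```python
def discrimination_index(responses, item):
    """Item pass rate in the top half of learners minus the bottom half.

    Learners are ranked by total score across all items.
    """
    if len(responses) < 2:
        raise ValueError("need at least two learners")
    ranked = sorted(responses, key=lambda r: sum(r.values()), reverse=True)
    half = len(ranked) // 2
    upper, lower = ranked[:half], ranked[-half:]
    p_upper = sum(r[item] for r in upper) / half
    p_lower = sum(r[item] for r in lower) / half
    return p_upper - p_lower

def difficulty_gap(responses, item, perceived):
    """Observed difficulty (share answering incorrectly) minus perceived difficulty."""
    observed = 1 - sum(r[item] for r in responses) / len(responses)
    return observed - perceived

# Hypothetical responses: question id -> 1 (correct) or 0 (incorrect).
responses = [
    {"q1": 1, "q2": 1, "q3": 1},
    {"q1": 1, "q2": 1, "q3": 0},
    {"q1": 0, "q2": 1, "q3": 0},
    {"q1": 0, "q2": 0, "q3": 0},
]
d_q1 = discrimination_index(responses, "q1")
gap_q3 = difficulty_gap(responses, "q3", perceived=0.4)
```

A question with an index near zero does little to separate stronger from weaker learners, and a large gap suggests the question is harder or easier than its label implies. Either result would be a reasonable trigger for review.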

5. Instructional Content Model Analysis

The performance of instructional content is a focal point in Adaptemy’s evaluation process. By correlating the consumption of each piece of instructional content with learners’ subsequent pass rates, Adaptemy provides valuable data for content improvement.

Low pass rates trigger the flagging of specific content for review by the client’s editorial team. This data-driven approach allows clients to make evidence-based, cost-effective enhancements to their product.
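
A minimal sketch of such a flagging rule follows. The threshold, the minimum number of attempts, the content identifiers, and the `flag_low_pass_content` function are all assumptions made for illustration.

```python
from collections import defaultdict

def flag_low_pass_content(attempts, threshold=0.5, min_attempts=3):
    """Return content ids whose subsequent pass rate falls below `threshold`.

    Items with fewer than `min_attempts` follow-up attempts are skipped,
    because their pass rate is not yet meaningful.
    """
    totals = defaultdict(lambda: [0, 0])  # content id -> [passes, attempts]
    for content_id, passed in attempts:
        totals[content_id][0] += int(passed)
        totals[content_id][1] += 1
    return sorted(
        cid for cid, (passes, n) in totals.items()
        if n >= min_attempts and passes / n < threshold
    )

# Hypothetical log: (content consumed, did the learner pass the next assessment?).
attempts = [
    ("video-1", True), ("video-1", True), ("video-1", True), ("video-1", False),
    ("text-2", False), ("text-2", False), ("text-2", False), ("text-2", True),
    ("video-3", False),
]
flagged = flag_low_pass_content(attempts)
```

Here `text-2` is flagged with a 25% pass rate, while `video-3` is left alone until more learners have used it.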

6. Learning Gain Analysis

Learning gain is a core metric for assessing the effectiveness of eLearning solutions. Adaptemy analyzes learning gain across different segments, such as student ability and course level. This analysis helps validate how effectively the product supports learners of varying abilities and assesses the impact of the eLearning system on student progress.

Products that cannot demonstrate transparent and convincing learning gains face challenges in justifying their return on investment. Adaptemy’s evaluation process ensures the product’s ability to showcase the benefits of eLearning.
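
Learning gain can be defined in several ways and the article does not specify one. The sketch below assumes a normalized gain, which is the share of the available improvement a learner actually achieved between a pre-test and a post-test, averaged per segment. The segments, scores, and function names are hypothetical.

```python
from collections import defaultdict
from statistics import mean

def normalized_gain(pre, post, max_score=100):
    """(post - pre) / (max_score - pre); 0.0 when there was no room to improve."""
    if pre >= max_score:
        return 0.0
    return (post - pre) / (max_score - pre)

def gain_by_segment(learners):
    """Average normalized gain for each segment label."""
    groups = defaultdict(list)
    for segment, pre, post in learners:
        groups[segment].append(normalized_gain(pre, post))
    return {segment: mean(gains) for segment, gains in groups.items()}

# Hypothetical learners: (ability segment, pre-test score, post-test score).
learners = [
    ("lower ability", 20, 60),
    ("lower ability", 40, 70),
    ("higher ability", 80, 90),
    ("higher ability", 60, 90),
]
gains = gain_by_segment(learners)
```

Normalizing by the room left to improve keeps high-scoring learners from looking as if they gained less simply because they started near the ceiling.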

7. Learning Path Analysis

Understanding how students progress through learning paths is crucial for optimizing eLearning experiences. Adaptemy evaluates the completion rates of different learning strategies and course levels. This analysis helps identify issues such as poor engine recommendations, user experience problems, or content-related issues. Low completion rates signal potential problems in the eLearning journey, and Adaptemy uses data-driven insights to inform remediation strategies.
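
The completion-rate comparison could be sketched as follows, grouping learning paths by strategy and course level. The strategy names, the record layout, and the `completion_rates` function are assumptions for illustration.

```python
from collections import defaultdict

def completion_rates(paths):
    """Share of started paths that were finished, per (strategy, level)."""
    started = defaultdict(int)
    completed = defaultdict(int)
    for strategy, level, steps_done, steps_total in paths:
        key = (strategy, level)
        started[key] += 1
        if steps_done >= steps_total:
            completed[key] += 1
    return {key: completed[key] / started[key] for key in started}

# Hypothetical paths: (strategy, course level, steps done, steps in path).
paths = [
    ("revision", "foundation", 5, 5),
    ("revision", "foundation", 2, 5),
    ("new-topic", "higher", 4, 4),
    ("new-topic", "higher", 4, 4),
]
rates = completion_rates(paths)
```

A group with a markedly lower rate than its peers is a starting point for investigation. The number alone does not say whether the cause is the recommendations, the user experience, or the content.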

8. Self-Efficacy Estimations

Beyond mastery of content, Adaptemy’s evaluation process also considers learner traits, such as self-efficacy. Self-efficacy refers to an individual’s belief in their capacity to achieve specific goals. Adaptemy models self-efficacy as part of the learning experience and monitors it through Likert scale ratings.

Clients often have strategic goals related to fostering desirable learner traits like self-efficacy. Adaptemy’s data analysis helps clients understand how their product impacts these traits and provides valuable insights for improvement.
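
As a simple illustration of monitoring Likert ratings over time, the sketch below averages the change between each learner's first and latest rating. The 1 to 5 scale, the data, and the `self_efficacy_shift` function are assumptions, and this is not Adaptemy's self-efficacy model.

```python
from statistics import mean

def self_efficacy_shift(ratings):
    """Average change from first to latest rating across learners.

    `ratings` maps a learner id to a chronological list of Likert ratings.
    Learners with fewer than two ratings are ignored; returns None if no
    learner has enough ratings.
    """
    shifts = [r[-1] - r[0] for r in ratings.values() if len(r) >= 2]
    return mean(shifts) if shifts else None

# Hypothetical ratings on an assumed 1-5 scale.
ratings = {
    "learner-a": [2, 3, 4],
    "learner-b": [3, 3],
    "learner-c": [4],
}
shift = self_efficacy_shift(ratings)
```

A positive average suggests learners report more confidence as they use the product. Likert data is ordinal, so a median or a distribution of shifts may be a safer summary than the mean.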

Conclusion

Adaptemy’s commitment to excellence in eLearning is underpinned by a rigorous evaluation process driven by comprehensive learning analytics. By continuously monitoring and analyzing key metrics related to system usage, model accuracy, curriculum, assessment content, instructional content, learning gain, learning paths, and self-efficacy, Adaptemy ensures that its AI-powered eLearning prototypes deliver optimal outcomes for learners and clients alike.

In the dynamic world of eLearning, where adaptability and effectiveness are paramount, Adaptemy’s data-driven approach is a testament to its dedication to providing cutting-edge educational solutions that empower learners and drive success. To learn more about our extensive research in this area, see [link: double blind test blogpost].

> If your team is committed to improving learning and training with AI, you can book a virtual meeting with Adaptemy here: