How do you know that a learning product makes a difference?

Finding an objective, comparable way to measure this is essential to good decision making, not only for courseware designers but also for teachers and schools deciding which solutions to adopt.

Luckily for the industry, this problem has been thoroughly addressed by the What Works Clearinghouse at the National Center for Education Evaluation and Regional Assistance, part of the Institute of Education Sciences.

They provide a framework and detailed procedures that set the standards any claim of effectiveness must meet.

Randomised Controlled Trials

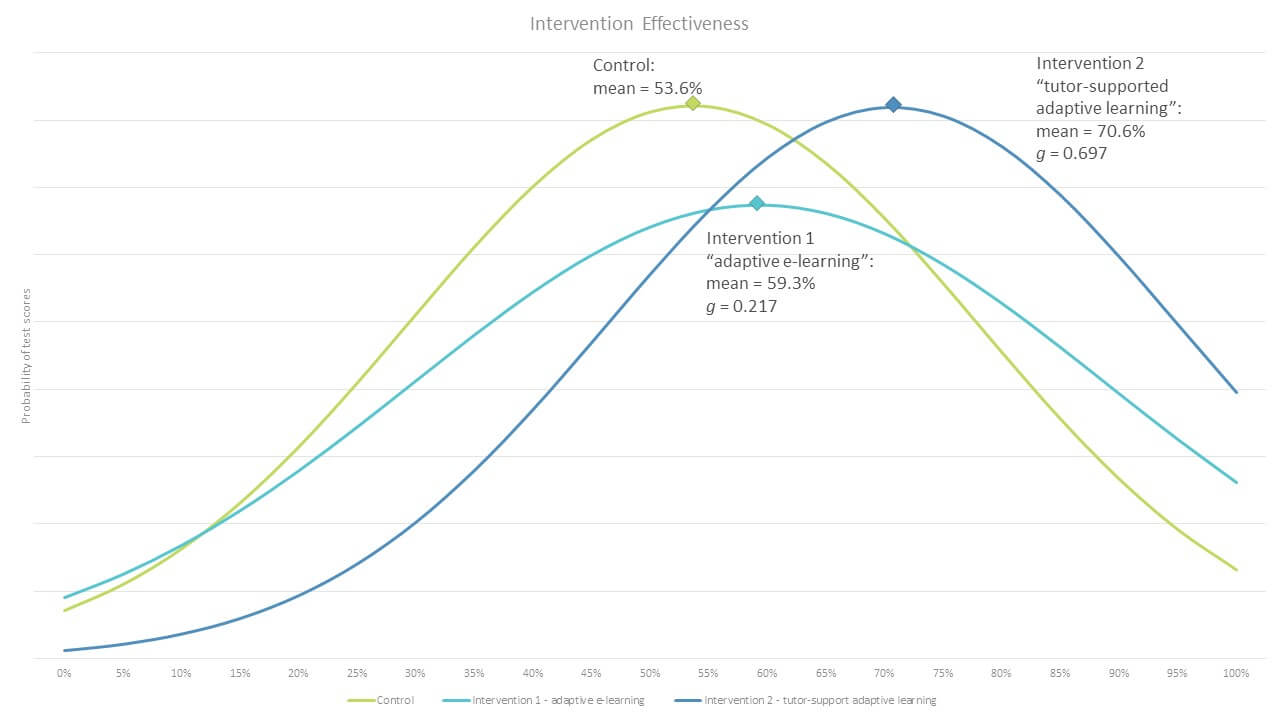

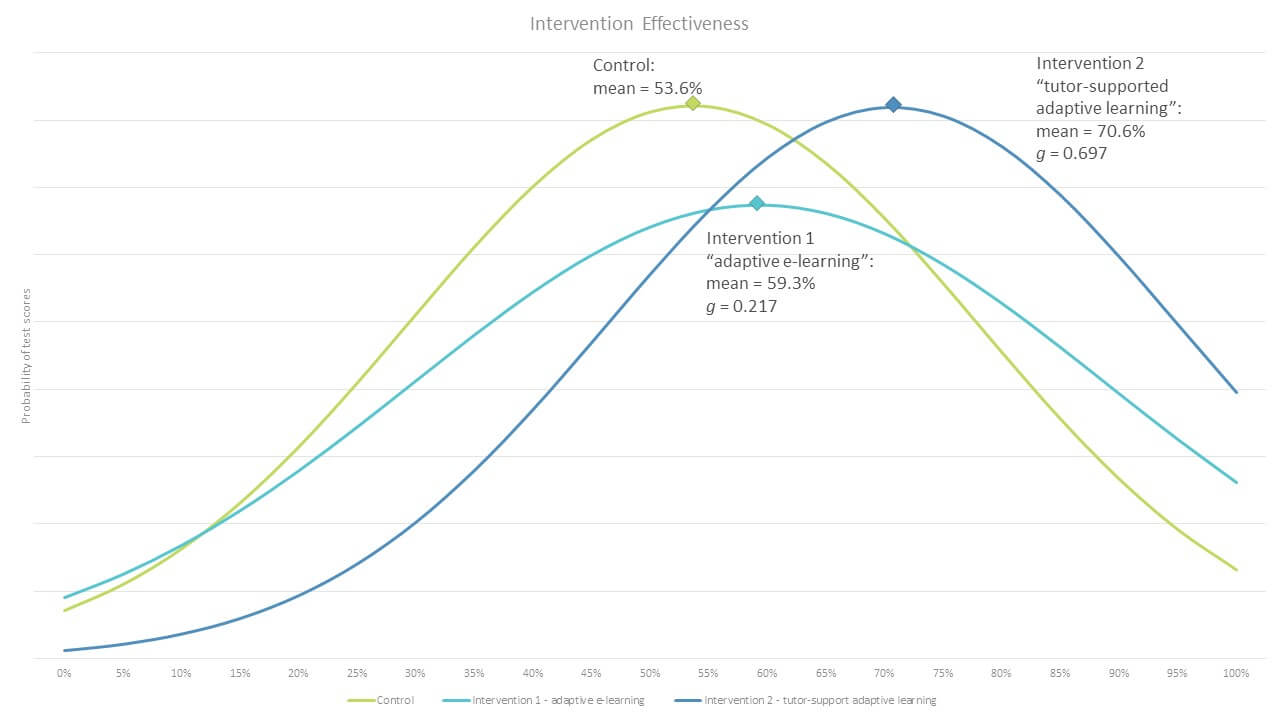

To measure how effective a learning solution is, a comparison is made between students who do not experience the intervention (the “control group”) and students who do. Students must be randomly assigned to either group. All students are assessed before the trial (the “pre-test”) and again after the trial (the “post-test”). Further details on the trial procedure are available in the Procedures Handbook.
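As a minimal sketch of the random assignment step, the snippet below shuffles a list of student IDs and splits it in half. The function name `assign_groups`, the seed, and the 50/50 split are illustrative assumptions, not part of the Procedures Handbook.

```python
import random

def assign_groups(student_ids, seed=0):
    """Randomly split students into a control and an intervention group.

    Hypothetical helper for illustration only; a real trial would follow
    the assignment rules set out in its own protocol.
    """
    rng = random.Random(seed)          # fixed seed so the split can be reproduced
    shuffled = list(student_ids)
    rng.shuffle(shuffled)              # random order removes any selection bias
    half = len(shuffled) // 2
    return {"control": shuffled[:half], "intervention": shuffled[half:]}

# Example: 20 students with IDs 1..20
groups = assign_groups(range(1, 21))
```

Both groups then sit the same pre-test and post-test, so any difference in outcomes can be attributed to the intervention rather than to how students were selected.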

The impact of the learning intervention is measured as the “effect size” or “improvement index”. An improvement index is usually described as the average increase in percentage points of a student’s score. For example, +8 would mean that a student who previously scored 65% on a test would now expect to score 73%.

The “corrected effect size” (Hedges’ g) takes into account the sample size and the standard deviation, which makes it easier to compare across studies. The effect size is usually described as the increase in the average score, measured in standard deviations. For example, +0.21 would mean that the intervention raises the average score by 0.21 times the standard deviation of the test scores.
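The calculation can be sketched as follows, using the standard textbook form of Hedges’ g: the difference in group means divided by the pooled standard deviation, multiplied by a small-sample correction factor. This is a simplified illustration that assumes the inputs are post-test scores for each group; the function name `hedges_g` and the sample scores are made up, and it omits adjustments (for example for pre-test differences) that a full analysis may apply.

```python
from math import sqrt
from statistics import mean, variance

def hedges_g(intervention, control):
    """Small-sample corrected effect size (Hedges' g) for two groups of scores."""
    n_t, n_c = len(intervention), len(control)
    # Pooled standard deviation, weighting each group's sample variance
    # by its degrees of freedom.
    pooled_sd = sqrt(
        ((n_t - 1) * variance(intervention) + (n_c - 1) * variance(control))
        / (n_t + n_c - 2)
    )
    # Uncorrected standardised mean difference.
    d = (mean(intervention) - mean(control)) / pooled_sd
    # Correction factor that shrinks the estimate slightly for small samples.
    correction = 1 - 3 / (4 * (n_t + n_c) - 9)
    return d * correction

# Illustrative (made-up) post-test scores, in percent
g = hedges_g([70, 75, 80, 85, 90], [60, 65, 70, 75, 80])
```

With only five students per group the correction factor is noticeably below 1; as the sample grows it approaches 1 and the corrected and uncorrected effect sizes converge.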

Adaptemy & Trinity College Dublin

After our very first adaptive learning product launch, Adaptemy commissioned the School of Education at Trinity College Dublin, Ireland’s most prestigious university, to conduct an evaluation of the effectiveness of two learning products powered by Adaptemy technology.

- Adaptive exercising

- Tutor-support adaptive learning

Adaptive exercising: a short, self-paced adaptive exercising solution, designed for use after a teacher has covered the topic in class. Exercises gave students immediate feedback, the difficulty level was dynamically adjusted to keep each student in a flow state, and misconceptions were highlighted to the student.

Tutor-support adaptive learning: similar to the adaptive exercising product, but students also attended weekly online group tutorials. Students were divided into groups of 4 based on their individual learner profiles, and the analytics gave tutors guidance on areas of focus.

Results

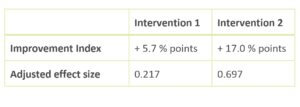

Students would expect an increase of 6 percentage points after using intervention 1 (adaptive exercising), and an increase of 17 percentage points after using intervention 2 (tutor-support adaptive learning).

Especially notable: for weaker students, those scoring less than 50% on the initial test, intervention 2 made a very significant difference, raising their expected scores by 27 percentage points.

Other measures

Randomised Controlled Trials are expensive tests to conduct, involving the large-scale organisation of willing control groups and test subjects, pre- and post-tests, and academic validation. Many other “in-line” measures of learning effectiveness are also possible. See some of our published papers here:

- An Evaluation Framework for Adaptive and Intelligent Tutoring Systems

- How Effective is Adaptive Learning? What Are the Results?

- Learning Effectiveness of Adaptive Learning in Real World Context

- Effective Learning Recommendations Powered by AI Engine

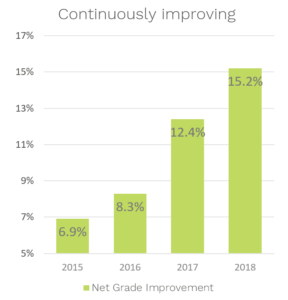

Increase in effectiveness over time

The above RCT study was carried out in early 2015, showing an Improvement Index of +6 percentage points on a single topic of an adaptive exercising product. Over the last 4 years, this Improvement Index has increased to 8.3 percentage points in 2017, 12.4 in 2018, and 15.2 in 2019!

References

Procedures for Randomised Controlled Trials: https://ies.ed.gov/ncee/wwc/Handbooks

Further details on the statistical calculations and issues can be found at: https://academic.oup.com/jpepsy/article/34/9/917/939415